At the Deep Learning Summit in London, we thoroughly enjoyed DeepMind's talk. DeepMind regularly makes the news, and its most famous feat is AlphaGo. This blog post is about the current research of Raia Hadsell, who is trying to push the limits of AI navigation by combining reinforcement learning with deep neural networks. The end goal is an end-to-end AI system that can handle complex navigation purely based on vision. Crucially, the knowledge the system acquires must carry over to new settings via transfer learning, creating a generic navigation mechanism. Such a model would have the following structure:

Sensors → Perception → Model of the world → Planning → Action

Combining reinforcement learning with deep neural networks is an area where DeepMind is among the absolute best in the world in terms of research. This technique has already proven to work very well in contexts such as computer games (Atari) and board games (AlphaGo), but if we want to apply it to real-life problems such as robot navigation, there are obstacles:

- Can a deep reinforcement learning agent learn different navigation tasks? In other words, can one generalize navigation?

- Can a deep reinforcement learning agent learn to navigate efficiently, given that it is essentially a brute-force method?

- Can a reinforcement learning agent learn from real data instead of simulated data from a controlled environment?

Navigation is a challenging problem, and the following questions give an idea of its complexity:

- Where am I?

- Where do I go from here?

- Where did I start?

- How far is X from Y?

- What is the shortest path from X to Y?

- Have I already been here?

- How long will it take me to get to the final goal?

The research so far has looked at maze navigation, where the reinforcement learning agent has to find the end point. The task is varied by using different mazes and/or by changing the start and end locations.
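To make this setup concrete, here is a minimal Python sketch of such a maze task. The grid layout, the `sample_episode` helper, and the breadth-first `shortest_path_length` baseline are all illustrative assumptions, not DeepMind's environment (their agent sees first-person pixels, never the grid). It only shows how a single maze yields many tasks once the start and goal are resampled, and what an optimal route length looks like for comparison.

```python
import random
from collections import deque

# Hypothetical toy maze: '#' is a wall, '.' is free space.
# The outer border is all walls, so neighbor lookups stay in bounds.
MAZE = [
    "#######",
    "#.....#",
    "#.###.#",
    "#.#...#",
    "#.#.#.#",
    "#...#.#",
    "#######",
]

def free_cells(maze):
    """Return all (row, col) positions that are not walls."""
    return [(r, c) for r, row in enumerate(maze)
            for c, ch in enumerate(row) if ch == "."]

def sample_episode(maze, rng):
    """Pick a distinct random start and goal for one episode."""
    start, goal = rng.sample(free_cells(maze), 2)
    return start, goal

def shortest_path_length(maze, start, goal):
    """Breadth-first search; returns the step count, or None if unreachable."""
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        (r, c), dist = queue.popleft()
        if (r, c) == goal:
            return dist
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (r + dr, c + dc)
            if maze[nxt[0]][nxt[1]] != "#" and nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None

rng = random.Random(0)  # fixed seed so runs are repeatable
start, goal = sample_episode(MAZE, rng)
optimal_steps = shortest_path_length(MAZE, start, goal)
```

A learned agent can then be judged by how close its episode length comes to `optimal_steps`, even though it never has access to the map that the search uses.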

The first attempts, with a traditional deep RL agent, took a long time to produce a result, and the agent showed little intelligent use of memory or efficient behavior. This traditional architecture used a convolutional neural network to process the pixel input in combination with a standard deep RL agent. The research team then modified the architecture by adding an LSTM cell. This second baseline model was an improvement, but the result was still not acceptable.
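For readers who have not met LSTMs before, the following is a minimal scalar sketch of one LSTM step in Python. It is the textbook formulation reduced to single numbers, with a hypothetical weight dictionary `w`; it is not the network used in the research, where the gates operate on large vectors of CNN features.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def lstm_step(x, h_prev, c_prev, w):
    """One scalar LSTM step.

    `w` maps a gate name to (input weight, hidden weight, bias).
    Returns the new hidden state h and the new cell state c.
    """
    def gate(name, activation):
        w_x, w_h, b = w[name]
        return activation(w_x * x + w_h * h_prev + b)

    f = gate("forget", sigmoid)        # how much old memory to keep
    i = gate("input", sigmoid)         # how much new information to write
    o = gate("output", sigmoid)        # how much memory to expose
    g = gate("candidate", math.tanh)   # the new information itself

    c = f * c_prev + i * g             # cell state: the carried memory
    h = o * math.tanh(c)               # hidden state passed to the policy
    return h, c

# Hypothetical all-zero weights: every sigmoid gate is 0.5, the candidate is 0.
zero_w = {name: (0.0, 0.0, 0.0)
          for name in ("forget", "input", "output", "candidate")}
h, c = lstm_step(x=1.0, h_prev=0.0, c_prev=2.0, w=zero_w)
```

The point to notice is the line that computes `c`: part of the previous cell state is carried forward at every step, which is what lets an agent remember something it saw many frames ago.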

The idea is that the LSTM cell gives the network a memory unit. In the next step, they came to the curious conclusion that the system works better when it has to predict the depth of the scene itself than when depth is supplied as an input, which is what they initially tried in order to improve the second baseline model.
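One way to read this finding is that depth becomes an extra training target next to the reinforcement learning objective. The Python sketch below shows that idea under stated assumptions: a mean-squared-error depth term and a hypothetical weight `beta`. This post does not specify the loss that was actually used, so treat both choices as placeholders.

```python
def depth_loss(predicted, target):
    """Mean squared error between predicted and true depth values."""
    if len(predicted) != len(target):
        raise ValueError("depth maps must have the same number of pixels")
    return sum((p - t) ** 2 for p, t in zip(predicted, target)) / len(target)

def total_loss(rl_loss, predicted_depth, true_depth, beta=1.0):
    """RL objective plus a weighted auxiliary depth-prediction term.

    `beta` (assumed) controls how strongly the depth task shapes the
    shared visual features.
    """
    return rl_loss + beta * depth_loss(predicted_depth, true_depth)

# Toy numbers: two "pixels", one predicted correctly and one off by 2.
loss = total_loss(2.0, [1.0, 2.0], [1.0, 4.0], beta=0.5)
```

Because the depth term is only needed during training, the agent can still act from RGB images alone at test time.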

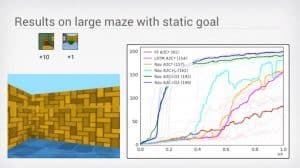

Finally, they added a second LSTM cell, and the agent's speed also became an input variable. This gives the following impressive results in a large maze, where the final model is shown as the blue line. The architecture reaches high scores in far fewer iterations than the previous architectures, which indicates efficient use of memory and smart behavior.

The result is impressive in itself, but it is still very research-oriented: the mazes were artificially created and the tests only show results on artificial RGB data. The next logical step for DeepMind was to move to real data, and it helps when your parent company is Google and has a service like Street View in its arsenal. This makes the context realistic but also more difficult, because the maze structure (which the algorithm does not see) becomes more complex.

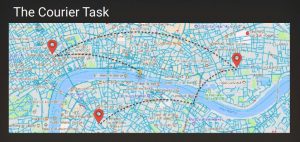

In addition, the RGB images are much more complex and noisier. In this Street View world, the deep reinforcement learning agent was given the courier task: it must navigate from place to place in London in an optimal manner. The reward increases gradually once the agent comes within 400 meters of the goal. London was chosen because the task is comparable to the famous London cab driver exam.
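A distance-based reward of this kind could be sketched in Python as follows. The sketch reads the 400 meters as a radius around the goal, and assumes a linear ramp and a maximum reward of 1.0; those choices, along with the function names, are illustrative and not taken from the research itself.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

def shaped_reward(distance_m, radius_m=400.0, max_reward=1.0):
    """Zero outside the radius, rising linearly to max_reward at the goal."""
    if distance_m >= radius_m:
        return 0.0
    return max_reward * (1.0 - distance_m / radius_m)

# Usage: reward for an agent position relative to a goal position.
def reward_at(agent_latlon, goal_latlon):
    return shaped_reward(haversine_m(*agent_latlon, *goal_latlon))
```

Shaping the reward near the goal gives the agent a learning signal before it reaches the exact destination, which matters in a city-sized environment where random exploration would rarely arrive by chance.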

This exam is considered one of the most difficult tests in the world, and the people who pass it study for years. They must know the entire landscape of 25,000 streets and their points of interest by heart. The result is impressive because the deep reinforcement learning agent is able to use memory efficiently and build up navigation intelligence that lets it navigate in areas it has never seen.

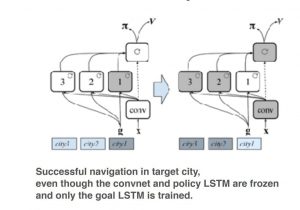

Aspects such as the location of rivers, tunnels, etc., and the interpretation of those images to make smart choices, play a role in this. Because the architecture has two LSTM cells, the navigation concept can be transferred to other cities in the world by training only one LSTM cell on the new data. Transfer learning thus makes the model usable in any city.
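The mechanics of retraining only one part of a network can be sketched in a few lines of Python. The parameter group names (`vision_cnn`, `policy_lstm`, `city_lstm`) and the tiny weight lists are hypothetical stand-ins; the sketch only illustrates freezing everything except one module during a gradient step, not the actual model.

```python
def sgd_step(params, grads, trainable, lr=0.1):
    """Return new parameters; only groups named in `trainable` are updated."""
    updated = {}
    for name, values in params.items():
        if name in trainable:
            updated[name] = [v - lr * g for v, g in zip(values, grads[name])]
        else:
            updated[name] = list(values)  # frozen: copied unchanged
    return updated

# Hypothetical parameter groups; a real model holds large weight tensors.
params = {
    "vision_cnn": [0.5, -0.2],
    "policy_lstm": [0.1, 0.3],
    "city_lstm": [0.0, 0.0],
}
# Pretend gradients computed on data from a new city.
grads = {name: [1.0, 1.0] for name in params}

# Adapt to the new city by updating the city-specific module only.
new_params = sgd_step(params, grads, trainable={"city_lstm"})
```

Keeping the shared modules frozen means the general navigation behavior learned in the first city is preserved, while the small trainable part absorbs what is specific to the new one.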

This research is part of DeepMind's strategy of developing powerful general learning algorithms that benefit humans. They seek to move AI out of the narrow-AI context and open the path toward AGI. Google paid $500 million at the time for a company that offers no product or service, and this seems to be yet another smart acquisition by the giant.