This post is based on Àgata Lapedriza's talk at the Deep Learning Summit in London.

Àgata Lapedriza is a professor at the Open University of Catalonia and a visiting researcher at MIT. Lapedriza does research on recognizing emotions in photos and videos. This research immediately caught my interest since I did similar research on emotions during my internship (before joining Continuum).

Emotions

Research on recognizing emotions may seem unusual, but it has clear practical uses. Educational applications, for example, could suggest tips or exercises based on the user's emotion (e.g., enthusiasm or confusion). In the healthcare sector, such systems could recognize patients' emotions so that the necessary actions can be taken.

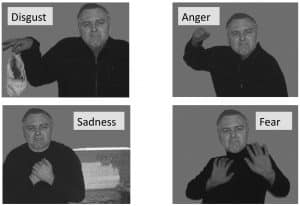

Most methods for this task analyze facial expressions in photographs. Other studies focus on the shoulders or the posture of the body. Unfortunately, these methods often fall short. What emotion does the man in the photo on the right have? Recognizing emotions in other people is not always easy, even for us. But what if more information was available?

The pictures above show how context can change the emotion we perceive. All four faces are identical, but the extra information in each photo makes it easy for a person to recognize the emotion.

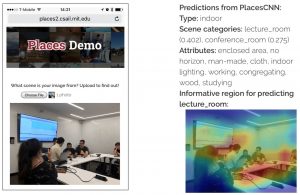

To determine this context, a dataset of pictures annotated with the type of place (e.g., a classroom) and various attributes was collected. Lapedriza also used a CNN (Convolutional Neural Net) to determine the place and attributes.

Places

Armed with the method of determining emotions using facial expressions and the PlacesCNN, they could begin to improve the existing systems. There was only one problem: labeled data.

To recognize the emotion of individuals in photographs using context, they had to have examples. They did this through the following steps:

1. Scrape photos from search engines and existing research datasets

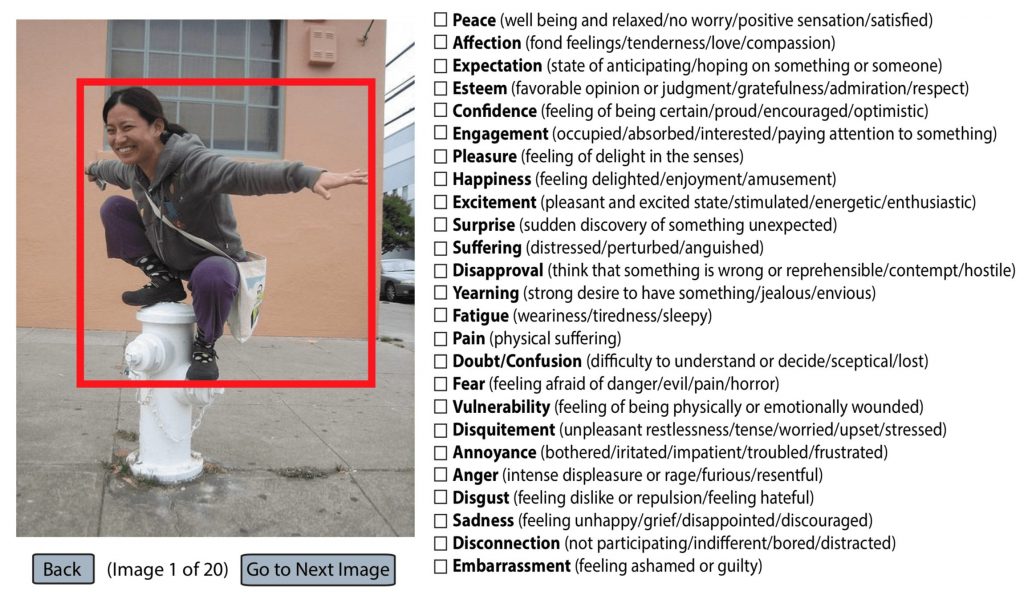

2. Create a tool for annotating the photos

3. Use crowdsourcing to annotate as many photos as possible

More info on the Places Demo can be found here.

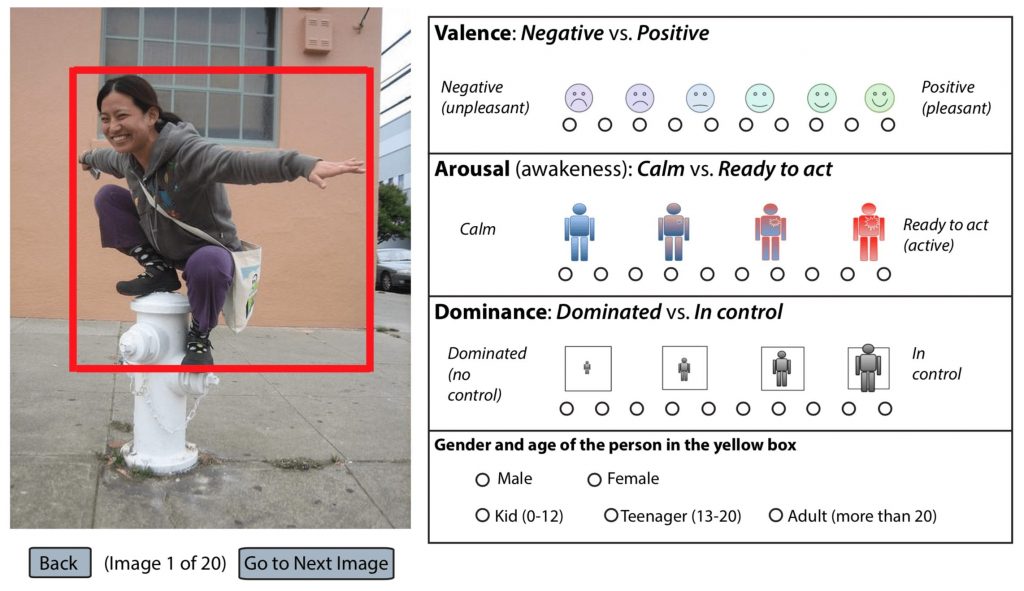

VAD Emotional State Model

The tool shown above allows the user to choose between 26 emotions for the indicated person in the photo. There is also the ability to provide additional metadata. This metadata is based on the "VAD Emotional State Model". This model describes emotions through three numeric metrics: Valence (how pleasant the situation is), Arousal (how excited the person is) and Dominance (whether the person is in control or not). These three metrics provide more information about the emotions. Gender and age range are also tracked. With all this information, the EMOTIC dataset was built with 23,571 annotated photos showing 34,320 people.
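To make the annotation format more concrete, here is a minimal sketch of what one annotated person could look like as a data record. The class name, the field names, the 1 to 10 rating scale and the example values are all assumptions for illustration; the post does not describe the actual file format of the dataset.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Assumed rating scale for the three VAD metrics; the post does not state it.
VAD_MIN, VAD_MAX = 1, 10


@dataclass
class PersonAnnotation:
    """One annotated person in a photo (hypothetical schema)."""
    emotions: List[str]  # chosen from the 26 emotion categories
    valence: int         # how pleasant the situation is
    arousal: int         # how excited the person is
    dominance: int       # whether the person is in control
    gender: str
    age_range: str

    def __post_init__(self) -> None:
        # Reject ratings outside the assumed scale.
        for name in ("valence", "arousal", "dominance"):
            value = getattr(self, name)
            if not VAD_MIN <= value <= VAD_MAX:
                raise ValueError(f"{name}={value} is outside {VAD_MIN}..{VAD_MAX}")

    def vad(self) -> Tuple[int, int, int]:
        """Return the three VAD metrics as one tuple."""
        return (self.valence, self.arousal, self.dominance)


# Made-up example values, not taken from the dataset.
example = PersonAnnotation(
    emotions=["confusion"],
    valence=4,
    arousal=6,
    dominance=3,
    gender="male",
    age_range="adult",
)
print(example.vad())
```

Keeping the categorical emotions and the continuous VAD values in the same record mirrors the idea that the two describe the same person in complementary ways.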

This dataset is available for free download.

The next step was to combine the Places and EMOTIC datasets to determine emotion and VAD metrics.

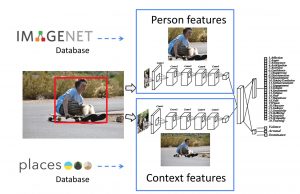

This system uses two CNNs: one that is pre-trained on the ImageNet dataset (a collection of photos of objects) and a second that is pre-trained on their own Places dataset. The first CNN takes as input a smaller crop of the photo showing the person whose emotion is to be determined. The second CNN gets the entire photo to detect features of the context. The outputs of these CNNs are then combined so that the prediction draws on information from both.
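The combination step can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the post does not say how the two outputs are combined, so concatenation is assumed here; the feature vectors are random stand-ins for what the two CNNs would produce; and the weights are random and untrained. All function names are hypothetical.

```python
import math
import random

NUM_EMOTIONS = 26  # emotion categories offered by the annotation tool
NUM_VAD = 3        # valence, arousal, dominance


def linear(inputs, weights, bias):
    """Dense layer: one output per weight row."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, bias)]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def random_layer(n_out, n_in, rng):
    """Untrained layer with small random weights and zero bias."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def fuse_and_predict(person_features, context_features, emotion_layer, vad_layer):
    # Assumed fusion: concatenate the two feature vectors.
    fused = list(person_features) + list(context_features)
    # One independent score in (0, 1) per emotion category.
    emotion_scores = [sigmoid(z) for z in linear(fused, *emotion_layer)]
    # Three unbounded regression outputs for valence, arousal, dominance.
    vad = linear(fused, *vad_layer)
    return emotion_scores, vad


rng = random.Random(0)
person_features = [rng.random() for _ in range(8)]   # stand-in for the person CNN
context_features = [rng.random() for _ in range(8)]  # stand-in for the context CNN
emotion_layer = random_layer(NUM_EMOTIONS, 16, rng)
vad_layer = random_layer(NUM_VAD, 16, rng)

emotion_scores, vad = fuse_and_predict(
    person_features, context_features, emotion_layer, vad_layer
)
print(len(emotion_scores), len(vad))
```

In a real system the two stand-in vectors would come from the pre-trained networks, and the final layers would be trained on the annotated photos.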

This figure shows that for all emotions except "esteem," the combination (B + I) of the person network (B) and the context network (I) scores better than either network on its own.

After the presentation, the big question was, of course, "What about video?" Lapedriza and her team are already working on this!

Also be sure to read the original paper. It is easy to understand and very interesting. Do you want to know more about machine learning or AI in general? Then keep an eye on our website for our events, or join our tribe!