Recently, Kristof and Nico gave a workshop at VOKA titled "Optimizing the order intake process through Artificial Intelligence (AI)". Because the topic drew a lot of interest, they are also sharing their expertise in this blog post.

What does the order intake process actually look like?

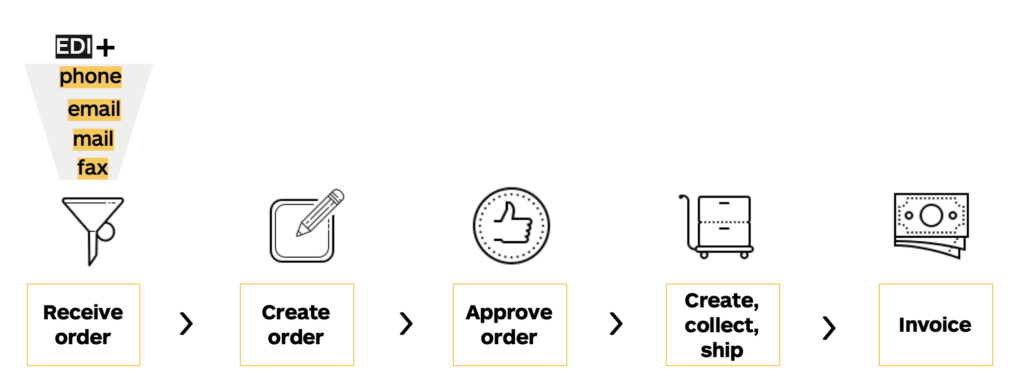

The traditional order intake process consists of five steps.

In the first step, messages come in through various channels, and a single company often uses several of them. A message may, for example, arrive automatically via Electronic Data Interchange (EDI), or manually in a mailbox or over the phone. Once a message has been received, the customer's intent must first be analyzed and identified: is it an order, a quote request, a complaint, ...?

Once an order is identified, it can be created (step 2).

A complex order must first be approved by a supervisor or manager before it can be confirmed (step 3).

Once the order has been approved, it can be picked and shipped (step 4).

Finally, the invoice is sent to the customer (step 5).

Many actions in this process can be optimized, such as scheduling order picking, improving delivery routes, and automatically creating an order from an email.

In late 2018, the American Productivity & Quality Center (APQC) studied these optimizations by examining a large number of U.S. companies that had already optimized their order intake process.

The APQC found several benefits, including:

- The cost per order drops by up to $15

- Orders are processed 46% faster

- Fewer errors are made

- Customer satisfaction increases

This blog post delves into one of the first steps of the order intake process: identifying the intent of a message.

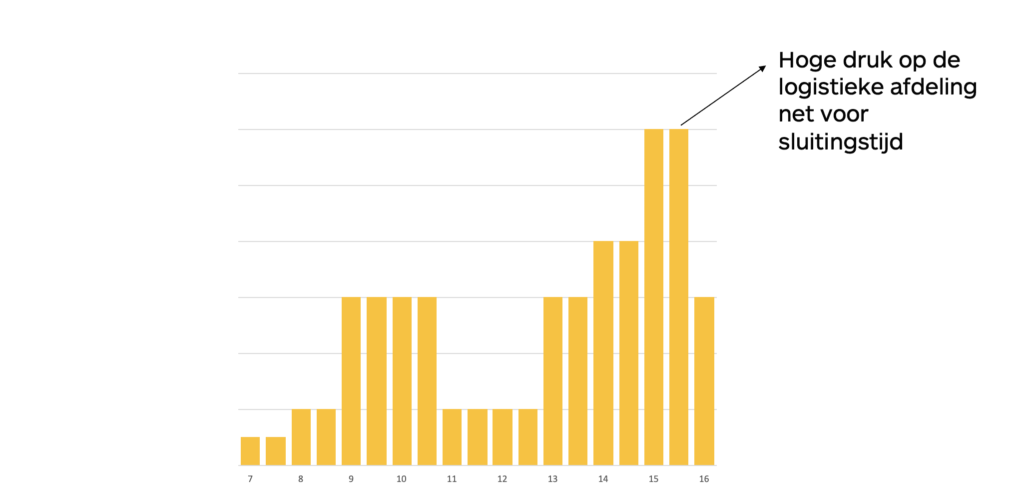

Many types of messages arrive in an employee's mailbox within a very short period of time, more than a manual process can keep up with. The employee managing the mailbox works through the emails one by one. Because that person does not know what type of message it is until it has been read, it is hard to prioritize, and important messages can sit unanswered for a long time. The employee may also not check the mailbox until late afternoon and then quickly enter the orders before the logistics department closes.

This leads to an excessive workload for the logistics department at the end of the workday.

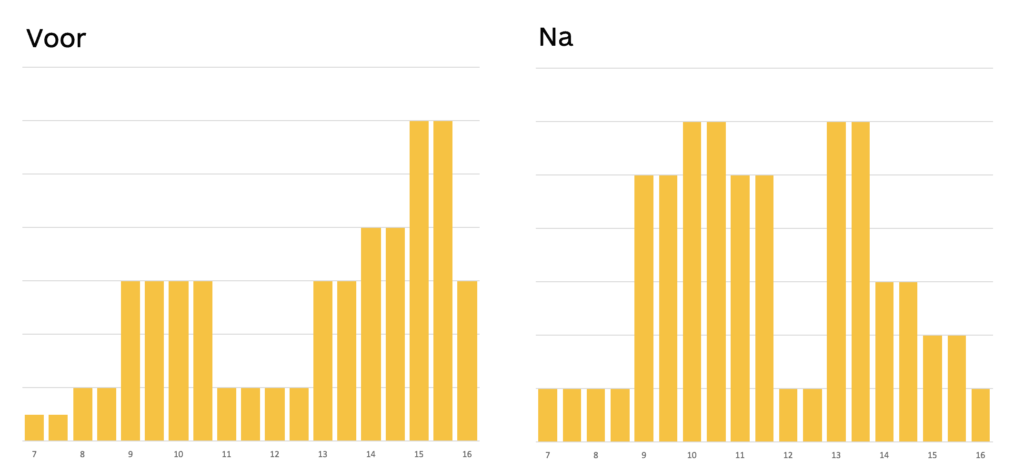

The graph above clearly illustrates this problem: a large volume of orders is still being entered at 3 p.m. (The graph is based on an analysis of one customer's order entry.)

How can we ensure that employees are able to prioritize and that orders are not left waiting?

AI can help here by applying a classification strategy.

Answering the question "What is the purpose of this message?" makes it possible to set up business rules that automatically prioritize incoming messages or even answer certain messages automatically (such as requests for tracking information).
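A minimal sketch of such business rules, assuming the classifier has already attached an intent label to each message. The intent names, the priority order and the choice to auto-answer only tracking requests are hypothetical examples, not the rules used in the project.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical business rules: lower number = handled first.
PRIORITY = {"order": 1, "complaint": 2, "quote_request": 3}
# Hypothetical: intents that can be answered automatically.
AUTO_REPLY_INTENTS = {"tracking_request"}


@dataclass
class Message:
    sender: str
    subject: str
    intent: str  # label predicted by the classifier


def route(messages: List[Message]) -> Tuple[List[Message], List[Message]]:
    """Split messages into auto-reply candidates and a prioritized work queue."""
    auto_reply = [m for m in messages if m.intent in AUTO_REPLY_INTENTS]
    queue = [m for m in messages if m.intent not in AUTO_REPLY_INTENTS]
    # Unknown intents go to the back; sort() is stable, so arrival order is kept per priority.
    queue.sort(key=lambda m: PRIORITY.get(m.intent, len(PRIORITY) + 1))
    return auto_reply, queue


inbox = [
    Message("a@example.com", "Where is my parcel?", "tracking_request"),
    Message("b@example.com", "Price for 500 units?", "quote_request"),
    Message("c@example.com", "PO 1234", "order"),
]
auto, queue = route(inbox)
print([m.subject for m in auto])   # ['Where is my parcel?']
print([m.subject for m in queue])  # ['PO 1234', 'Price for 500 units?']
```

Keeping the rules in plain data structures means the business can change priorities without retraining the model.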

Such a strategy is not easy to build, because messages can come in through multiple channels, be written in different languages, contain attachments, and so on. It is also important that the classification runs as efficiently as possible, since further optimizations may be built on top of it later.

The technical implementation of that strategy consists of the following three steps:


- Convert all attachments and faxes to PDF

- Extract the text with the Google Cloud Vision service

- Classify the message with the deep learning NLP model
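The three steps can be chained as in this sketch. It assumes each step is a function supplied by the caller, and that the email body and the text extracted from all attachments are classified together. The PDF converter and the classifier are hypothetical placeholders; `vision_ocr` shows one way to call Google Cloud Vision's `batch_annotate_files` with document text detection through the `google-cloud-vision` Python client. That synchronous call handles at most five pages per file; longer documents would need the asynchronous, Cloud Storage based variant.

```python
from typing import Callable, Iterable, List


def vision_ocr(pdf_bytes: bytes) -> str:
    """OCR a PDF with Google Cloud Vision (needs google-cloud-vision and credentials).

    The synchronous batch_annotate_files call processes at most 5 pages per file."""
    from google.cloud import vision  # imported lazily so the pipeline works without it

    client = vision.ImageAnnotatorClient()
    request = vision.AnnotateFileRequest(
        input_config=vision.InputConfig(content=pdf_bytes, mime_type="application/pdf"),
        features=[vision.Feature(type_=vision.Feature.Type.DOCUMENT_TEXT_DETECTION)],
    )
    response = client.batch_annotate_files(requests=[request])
    pages = response.responses[0].responses
    return "\n".join(page.full_text_annotation.text for page in pages)


def classify_message(
    body: str,
    attachments: Iterable[bytes],
    to_pdf: Callable[[bytes], bytes],  # step 1 (hypothetical converter)
    ocr: Callable[[bytes], str],       # step 2, e.g. vision_ocr
    classify: Callable[[str], str],    # step 3 (hypothetical model wrapper)
) -> str:
    """Run the three steps and return the predicted intent."""
    texts: List[str] = [body]
    for attachment in attachments:
        texts.append(ocr(to_pdf(attachment)))
    # The email body and all extracted attachment text are classified together.
    return classify("\n".join(texts))
```

In production one would pass `ocr=vision_ocr`; in tests, simple stand-in functions make the flow easy to check without calling the cloud service.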

Because attachments come in many different formats and it is impractical to implement a reader for each of them, all attachments are read with OCR. For the software stack, the Continuum Crafters always prefer open-source software.

Stack:


- Python

- TensorFlow

- Docker

- Elasticsearch

The solution is hosted on Google Cloud Platform, but the architecture is designed so that the software can easily be moved to another cloud provider.

Deep Learning NLP model?

Before delving deeper into the deep learning NLP model that was used, we first explain a few underlying concepts.

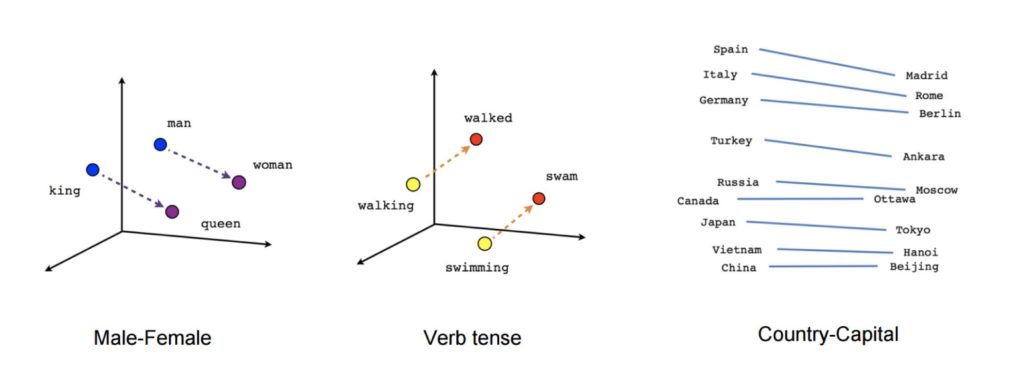

Word embeddings

To apply machine learning to text, the words are first converted into "embeddings". An embedding summarizes the meaning of a word in a vector of a chosen length. That meaning is derived from the words that surround it in the sentences in which it appears.
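The post does not say which algorithm produced the embeddings. As one well-known example, word2vec's skip-gram method learns from (target, context) pairs taken from a window around each word. This sketch only generates those pairs, not the vectors themselves; the window size is an arbitrary choice.

```python
from typing import List, Tuple


def context_pairs(tokens: List[str], window: int = 2) -> List[Tuple[str, str]]:
    """Return (target, context) pairs for every word within `window` positions."""
    pairs = []
    for i, target in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                pairs.append((target, tokens[j]))
    return pairs


print(context_pairs("please ship ten pallets".split(), window=1))
# [('please', 'ship'), ('ship', 'please'), ('ship', 'ten'),
#  ('ten', 'ship'), ('ten', 'pallets'), ('pallets', 'ten')]
```

Words that keep appearing in similar contexts end up with similar vectors once a model is trained on many such pairs.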

By training an algorithm on a large body of text, a model of the language is built in which calculations can be performed on words. The image above illustrates such a model: the words husband and wife have the same relationship as king and queen.
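The analogy can be computed with vector arithmetic: take wife minus husband, add king, and look for the nearest remaining word by cosine similarity. The three-dimensional vectors here are hand-picked toy values (roughly "royal", "male", "female"), not learned embeddings, which typically have tens to hundreds of dimensions.

```python
import math
from typing import Dict, List

# Hand-picked toy embeddings for illustration only.
EMB: Dict[str, List[float]] = {
    "king": [1.0, 1.0, 0.0],
    "queen": [1.0, 0.0, 1.0],
    "husband": [0.0, 1.0, 0.0],
    "wife": [0.0, 0.0, 1.0],
    "prince": [0.9, 1.0, 0.0],
}


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def analogy(a: str, b: str, c: str) -> str:
    """Solve 'a is to b as c is to ?' using vector(b) - vector(a) + vector(c)."""
    target = [vb - va + vc for va, vb, vc in zip(EMB[a], EMB[b], EMB[c])]
    candidates = [w for w in EMB if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(EMB[w], target))


print(analogy("husband", "wife", "king"))  # queen
```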

Convolutional neural networks (CNN)

A CNN is a type of neural network mainly used for image processing. It is designed to handle high-dimensional input. An 8K image, for example, has 7680x4320x3 (width x height x RGB) input values, almost 100 million in total, which a standard neural network cannot handle at that size. A CNN turns such an image into a smaller, more manageable representation that loses little or no important information, making it possible to process the image on today's hardware.
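A rough calculation shows why the 8K example is a problem for a standard network and how convolution and pooling help. The layer sizes used here (1,000 neurons, 32 filters of 3x3, five pooling steps) are hypothetical choices for illustration.

```python
def dense_weights(inputs: int, neurons: int) -> int:
    """Weights of a fully connected layer (biases ignored): one per input per neuron."""
    return inputs * neurons


def conv_params(kernel: int, in_channels: int, filters: int) -> int:
    """Each filter has kernel*kernel*in_channels weights plus one bias. Filters are
    shared across all positions, so the count does not depend on the image size."""
    return filters * (kernel * kernel * in_channels + 1)


def pooled_size(width: int, height: int, layers: int) -> tuple:
    """Spatial size after `layers` successive 2x2 max-pooling steps."""
    for _ in range(layers):
        width, height = width // 2, height // 2
    return width, height


inputs = 7680 * 4320 * 3
print(f"{inputs:,}")                                     # 99,532,800
print(f"{dense_weights(inputs, 1000):,}")                # 99,532,800,000
print(conv_params(kernel=3, in_channels=3, filters=32))  # 896
print(pooled_size(7680, 4320, layers=5))                 # (240, 135)
```

A single dense layer would need roughly 100 billion weights, while a convolutional layer needs only a few hundred, and each pooling step shrinks the spatial size by a factor of four.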

Such a CNN usually consists of 3 parts (see illustration):


- Reduction to a more manageable representation (convolution and pooling layers)

- Standard neural network (fully connected layers)

- Classification

To train a neural network to recognize animals, for example, a large labeled data set is needed: images of animals, each paired with the animal it shows. The images are fed to the CNN, and every time it recognizes something wrong (the input is a picture of a bird, but it recognizes a donkey), the network is penalized. When it recognizes the bird correctly, the network is rewarded. This process is what training the network means.

After enough repetitions, the network makes few or no mistakes and recognizes the correct animal.
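In practice, "penalizing" a network means minimizing a loss function with gradient descent. This sketch trains a single neuron (logistic regression, far simpler than a CNN) on made-up features for birds and donkeys: a confidently wrong prediction produces a large error and therefore a large weight correction, while a confidently correct prediction barely changes the weights. The features, learning rate and number of epochs are assumptions.

```python
import math
import random
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train(samples: Sequence[Tuple[Sequence[float], int]], epochs: int = 200,
          lr: float = 0.5, seed: int = 0) -> Tuple[List[float], float]:
    """Stochastic gradient descent on the cross-entropy loss of a single neuron."""
    rng = random.Random(seed)
    w = [rng.uniform(-0.1, 0.1) for _ in samples[0][0]]
    b = 0.0
    data = list(samples)
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # large when confidently wrong, close to 0 when confidently right
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def predict(w: List[float], b: float, x: Sequence[float]) -> int:
    return int(sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5)


# Hypothetical features: (has_feathers, relative_size); label 1 = bird, 0 = donkey.
data = [((1.0, 0.1), 1), ((1.0, 0.2), 1), ((0.9, 0.15), 1),
        ((0.0, 0.9), 0), ((0.1, 1.0), 0), ((0.0, 0.8), 0)]
w, b = train(data)
print([predict(w, b, x) for x, _ in data])
```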

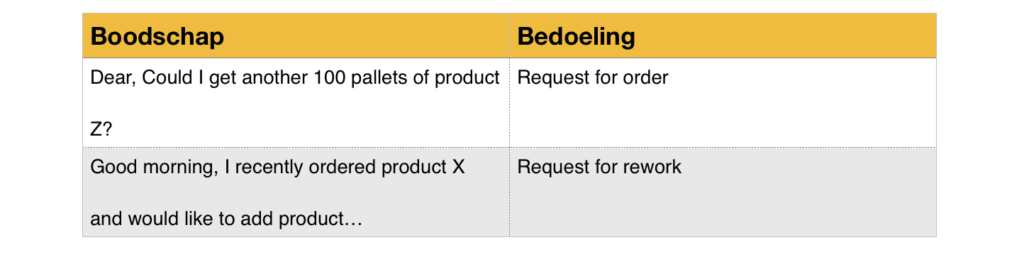

Labeled data

As described above, training a network and making it ready for use requires labeled data. In the order intake process, that data can be tracked by the BPM (business process management) system, which quickly provides enough data to train a neural network. The data consists of pairs of messages and their intent (order, quote request, complaint, and so on).
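A minimal sketch of turning process records into labeled pairs and holding out a validation set. It assumes each record exported from the BPM system holds the message text and the intent the message was eventually handled as; the field names `text` and `intent` and the example records are hypothetical.

```python
import random
from typing import Dict, List, Tuple


def build_dataset(records: List[Dict[str, str]]) -> Tuple[List[Tuple[str, int]], Dict[str, int]]:
    """Turn records into (message, label_id) pairs; records without an intent are skipped."""
    labels = sorted({r["intent"] for r in records if r.get("intent")})
    label_ids = {name: i for i, name in enumerate(labels)}
    pairs = [(r["text"], label_ids[r["intent"]]) for r in records if r.get("intent")]
    return pairs, label_ids


def split(pairs: List[Tuple[str, int]], val_fraction: float = 0.2, seed: int = 42):
    """Shuffle deterministically and hold out a validation set."""
    shuffled = pairs[:]
    random.Random(seed).shuffle(shuffled)
    n_val = int(round(len(shuffled) * val_fraction))
    return shuffled[: len(shuffled) - n_val], shuffled[len(shuffled) - n_val:]


records = [
    {"text": "Please send 10 pallets", "intent": "order"},
    {"text": "Price for 500 units?", "intent": "quote_request"},
    {"text": "Delivery was damaged", "intent": "complaint"},
    {"text": "fwd", "intent": ""},  # never classified, so not usable for training
]
pairs, label_ids = build_dataset(records)
print(label_ids)  # {'complaint': 0, 'order': 1, 'quote_request': 2}
```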

Implementation

The model is based on the paper "Convolutional Neural Networks for Sentence Classification" by Yoon Kim (Kim, 2014). In that paper, Kim explores how CNNs can be used for text classification. As described above, however, CNNs were not designed to process text. Fortunately, text can be transformed into a format that a CNN can take as input, using word embeddings. Kim presents several variants of the model that differ in how the embeddings are provided.
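Before a CNN can use a message, the text has to become a fixed-length sequence of word indices, which an embedding layer then maps to vectors. This is a simple sketch; the regex tokenizer, the reserved indices for padding and unknown words, and the maximum length are assumptions, not details from the post.

```python
import re
from typing import Dict, List

PAD, UNK = 0, 1  # reserved indices for padding and unknown words


def tokenize(text: str) -> List[str]:
    # Lowercase and keep runs of word characters (letters, digits, underscore).
    return re.findall(r"\w+", text.lower())


def build_vocab(texts: List[str], min_count: int = 1) -> Dict[str, int]:
    counts: Dict[str, int] = {}
    for t in texts:
        for tok in tokenize(t):
            counts[tok] = counts.get(tok, 0) + 1
    words = sorted(w for w, c in counts.items() if c >= min_count)
    return {w: i + 2 for i, w in enumerate(words)}  # 0 and 1 are reserved


def encode(text: str, vocab: Dict[str, int], max_len: int) -> List[int]:
    """Map tokens to indices, truncate to max_len and pad with PAD."""
    ids = [vocab.get(tok, UNK) for tok in tokenize(text)][:max_len]
    return ids + [PAD] * (max_len - len(ids))


vocab = build_vocab(["Ship 10 pallets", "ship invoice"])
print(encode("Ship pallets tomorrow", vocab, max_len=5))  # [5, 4, 1, 0, 0]
```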

Because the vocabulary of an order intake process is very specific and can include dialect words, dedicated embeddings are learned while training the neural network. The architecture learns them itself, based on the multichannel CNN variant discussed in the paper.
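A pure-Python sketch of the forward pass of a multichannel CNN in the style of Kim (2014): each filter slides over windows of consecutive word vectors in both embedding channels, the two results are added, a ReLU is applied, and max-over-time pooling keeps the strongest response per filter; a softmax layer then turns the pooled features into intent probabilities. The weights are random, training and dropout are omitted, and the dimensions, filter widths and number of classes are hypothetical. A real implementation would use a framework such as TensorFlow, as in the stack listed above.

```python
import math
import random
from typing import List


class KimCNN:
    """Forward pass only: two embedding channels, shared filters of several widths,
    ReLU, max-over-time pooling and a softmax output layer. Weights are random."""

    def __init__(self, vocab_size: int, dim: int = 8, widths=(2, 3),
                 filters_per_width: int = 4, classes: int = 3, seed: int = 0):
        rng = random.Random(seed)

        def rand() -> float:
            return rng.uniform(-0.5, 0.5)

        # Two embedding tables; during training one would stay static, one be fine-tuned.
        self.channels = [[[rand() for _ in range(dim)] for _ in range(vocab_size)]
                         for _ in range(2)]
        # Each filter: (width, weights over width*dim values, bias).
        self.filters = [(h, [rand() for _ in range(h * dim)], rand())
                        for h in widths for _ in range(filters_per_width)]
        self.out_w = [[rand() for _ in self.filters] for _ in range(classes)]
        self.out_b = [0.0] * classes

    def features(self, ids: List[int]) -> List[float]:
        feats = []
        for h, w, b in self.filters:
            best = 0.0  # ReLU outputs are >= 0, so 0 is a safe starting maximum
            for i in range(len(ids) - h + 1):
                s = b
                for table in self.channels:  # same filter on both channels, results added
                    window = [x for tok in ids[i:i + h] for x in table[tok]]
                    s += sum(wi * xi for wi, xi in zip(w, window))
                best = max(best, s)  # max(0, max over positions) = ReLU + max pooling
            feats.append(best)
        return feats

    def predict_proba(self, ids: List[int]) -> List[float]:
        f = self.features(ids)
        logits = [sum(wi * fi for wi, fi in zip(row, f)) + b
                  for row, b in zip(self.out_w, self.out_b)]
        m = max(logits)  # subtract the max for a numerically stable softmax
        exps = [math.exp(z - m) for z in logits]
        total = sum(exps)
        return [e / total for e in exps]


model = KimCNN(vocab_size=10)
print(model.predict_proba([2, 3, 4, 0, 0]))  # three probabilities summing to 1
```

Input sequences should be padded to at least the largest filter width; otherwise a filter has no window to slide over and its feature stays 0.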

Once the text has been transformed into a representation that the CNN can handle, and the correct intent of each message has been extracted from the BPM analytics, the CNN can be trained.

Benefits of optimizing the order intake process

In a controlled test environment in which an employee reviews and classifies emails with attachments nonstop, analysis shows that the employee becomes careless: some attachments are not read, or the body of the email is skimmed too quickly. This results in an accuracy of 98%.

When the model performs the same task, it reaches 99% accuracy. It is also much faster: the model needs only 54 milliseconds to identify the intent of an email, whereas an average employee needs 9.4 seconds. The employee's time may seem quick, but it was recorded in an ideal test environment in which the employee was engaged in nothing else.

Using the model, the total time from receiving a message to starting the creation of an order is almost halved: from 41 minutes without optimization to 21 minutes with optimization.
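The reported figures translate into the following ratios; the calculation only restates the numbers from the test.

```python
def speedup(human_seconds: float, model_seconds: float) -> float:
    return human_seconds / model_seconds


def relative_reduction(before: float, after: float) -> float:
    return (before - after) / before


def errors_per(n: int, accuracy: float) -> int:
    """Expected number of misclassified messages out of n at a given accuracy."""
    return round(n * (1 - accuracy))


print(f"{speedup(9.4, 0.054):.0f}x faster")            # 174x faster
print(f"{relative_reduction(41, 21):.1%} less time")   # 48.8% less time
print(errors_per(1000, 0.98), errors_per(1000, 0.99))  # 20 10
```

Going from 98% to 99% accuracy halves the number of misclassified emails, from about 20 to about 10 per 1,000.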

Orders are also spread more evenly over the workday as they are forwarded to the logistics department, which eliminates the end-of-day peak in that department (as shown in the graph below).

During the workshop, Nico and Kristof also discussed other business cases to which AI can be applied, such as workload optimization and dynamic pricing.

If you want to know more about any of those use cases or about implementing the automatic order intake process, feel free to contact us!

Nico Petrakis

Software Crafter

Nico Petrakis holds a master's degree in Artificial Intelligence from the University of Maastricht. He currently works at J&J, where he strengthens the data science team with his knowledge of data visualization. Nico also collaborates on several internal AI research projects.